Efficient job Scheduling with SLURM Block Topology on Crusoe with NVIDIA GB200 NVL72

Unlock a 10%+ throughput increase on NVIDIA GB200 NVL72. This guide details how to leverage SLURM's topology-aware block scheduling to align large-scale AI training (Llama 4 Scout 17B) with the NVLink rack architecture.

This blog was created in collaboration with Matthias Reso on the Applied AI team at Meta.

At Crusoe Cloud, we are committed to delivering the highest quality compute platform to our customers. This means collaborating directly with market leaders like NVIDIA to deliver state of the art Infrastructure that is optimized for the latest AI workloads. We are proud to help bring to market new paradigms of computing like the NVIDIA Blackwell architecture.

This new architecture brings two new features that mark a shift in traditional accelerated computing systems. The design leverages the ARM-based NVIDIA Grace™ CPUs for serial tasks, and is designed in a rack-scale form factor with NVIDIA GB200 NVL72 systems. Each GB200 NVL72 system connects 36 Grace CPUs and comes with 18 compute nodes with 4 GPUs each, providing up to 72 NVIDIA Blackwell B200 GPUs in a rack-scale design.

New features such as the NVIDIA NVLink Switch System transforms 72 GPUs into a single, high-performance compute fabric, delivering 130 TB/s of aggregate bandwidth and 1.8 TB/s bi-directional throughput per GPU — 14× faster than PCIe Gen5. This fifth-generation NVLink architecture enables seamless GPU-to-GPU communication across servers, which helps with large scale model training as well as disaggregated inference.

Transitioning to ARM based CPU means certain libraries may need to be recompiled to support ARM architecture. Depending on the developer, this can either be a quick code change, or may require troubleshooting dependencies. To help developers navigate this transition, NVIDIA does release ARM compatible container images in their registry to help users get started quicker, so more often than not only a couple of tweaks are needed to get started.

With the introduction of the new 72 GPU NVLink domain and for multi-rack architectures, topology aware scheduling is more important than ever to ensure that workloads are placed coherently within the system. In this blog, we explore how to enable efficient block scheduling for GB200 NVL72 using SLURM Topology Plug in, and show how aligning training runs to respect the rack scale NVLink domains results in over 10% increase in total throughput during training.

How to get started

To benchmark training performance on GB200 NVL72, we teamed up with the team at PyTorch to run a pretraining job for the Llama 4 Scout 17B parameter model. This allowed our team to both validate that our GB200 NVL72 clusters were set up appropriately, and also allows us to work with the PyTorch team directly to help validate GB200 NVL72 support for PyTorch nightly versions.

In order to get started with SLURM Block Topology, we recommend visiting our GitHub SLURM repository. You can take a look at our blog here to learn more.

SLURM setup

- Set up your environment following our terraform SLURM solution.

- Leverage

ubuntu24.04-nvidia-nvl-arm64-gb200image for ARM support on GB200 NVL72. - In order to create a topology file, you can query the Crusoe CLI to determine which nodes have the same

pod_id. This will confirm which instances are running on the same rack with the shared NVLink Domain. Following our support doc on Topology aware scheduling, you can createtopology.conffile like below.

##################################################################

# Slurm's network topology configuration file for use with the

# topology/block plugin

##################################################################

BlockName=block01 Nodes=node[000-017]

BlockName=block02 Nodes=node[018-035]

BlockName=block03 Nodes=node[036-053]

BlockName=block04 Nodes=node[054-071]

BlockName=block05 Nodes=node[072-089]

BlockName=block06 Nodes=node[090-107]

BlockSizes=18

- Each block in this file represents a single GB200 NVL72 rack with 18 compute nodes.

- Edit the slurm.conf file on your head node to include the following TopologyPlugin.

ubuntu@slurm-head-node-0:~$ cat /etc/slurm/slurm.conf | grep -i topo

TopologyPlugin=topology/block

- Also on the head node, reconfigure SLURM to recognize the new topology.

sudo -i scontrol reconfigureTorchTitan environment setup

To pretrain the Llama 4 Scout 17B model on NVIDIA GB200 NVL72, we use TorchTitan, a high-performance PyTorch library ideal for large-scale distributed training and GB200 NVL72 validation. We set up a Conda environment for the ARM architecture. We use nightly builds of PyTorch and TorchTitan for the latest features. For multi-node deployments, due to the ARM-based Grace CPU within GB200 NVL72, setup may need to occur directly on a compute node if the head node contains an x86 CPU.

The following code block outlines how to set up a conda environment with UV for distributed training.

# Install Miniforge

curl -L -O "https://github.com/conda-forge/miniforge/releases/latest/download/Miniforge3-$(uname)-$(uname -m).sh"

bash Miniforge3-$(uname)-$(uname -m).sh

#Relogin to activate conda environment

conda create -n titan python=3.12

conda activate titan

# Install UV

pip install uv

# Install torch + torchtitan

git clone https://github.com/pytorch/torchtitan

uv pip install --pre torch torchvision --index-url https://download.pytorch.org/whl/nightly/cu128

cd torchtitan

uv pip install -r requirements.txt

uv pip install .

python scripts/download_hf_assets.py --repo_id meta-llama/Llama-4-Scout-17B-16E --assets=tokenizer --hf_token=<HF_TOKEN>Benchmarking Llama4 Scout 17B model

We now benchmark Llama 4 Scout 17B training using this setup. We chose the Llama 4 Scout 17B model because its scale fits within a single GB200 NVL72 rack, allowing us to demonstrate the scaling properties of the IB connected NVLink domains when adding more racks. As the NVLink domain spans the whole rack, we are going to use FSDP as the only parallelism strategy on 16 trays resulting in a training on 64 GPUs.

The key to achieving optimal performance on a multi-rack GB200 NVL72 system is ensuring that the training job is scheduled in a way that respects the physical NVLink topology. This is where SLURM block scheduling comes into play. The SLURM setup described above is configured to enforce this topological alignment:

#!/bin/bash

#SBATCH --job-name=torchtitan_multi_node

#SBATCH --ntasks=16

#SBATCH --nodes=16

#SBATCH --cpus-per-task=96

#SBATCH --segment=16

MASTER_ADDR=$(scontrol show hostnames "$SLURM_JOB_NODELIST" | sort -r |head -n 1)

export MASTER_ADDR=$(getent ahostsv4 $MASTER_ADDR | head -n 1 | cut -d " " -f 1)

echo "MASTER_ADDR: $MASTER_ADDR"

# Networking configs specific to Crusoe

export UCX_NET_DEVICES=ens7

export NCCL_IB_HCA=mlx5_1:1,mlx5_2:1,mlx5_3:1,mlx5_4:1

CONFIG_FILE="/home/${USER}/torchtitan/torchtitan/models/llama4/train_configs/llama4_17bx16e.toml"

export WANDB_PROJECT="Torchtitan Llama 4 Scout"

export WANDB_NAME="bf16"

dcgmi profile --pause

# BFLOAT16 BASELINE

srun torchrun --nnodes ${SLURM_JOB_NUM_NODES} --nproc_per_node 4 --rdzv_id 101 \

--rdzv_backend c10d --rdzv_endpoint "${MASTER_ADDR}:29501" -m torchtitan.train \

--job.config_file ${CONFIG_FILE} "$@" \

--metrics.log_freq=10 \

--training.steps=50 \

--parallelism.data_parallel_shard_degree=-1 \

--parallelism.expert_parallel_degree=16 \

--parallelism.tensor_parallel_degree=1 \

--parallelism.expert_tensor_parallel_degree=1 \

--training.local_batch_size=10 \

--training.seq_len=8192 \

--compile.enable

dcgmi profile --resumeGiven our topology.conf (where each block is 18 nodes, representing a single GB200 NVL72 rack), setting --segment=16 ensures that the job is scheduled only within a contiguous block of 16 nodes. Since the blocks are defined to align with the physical rack boundaries, this configuration keeps the entire training run within a single 72-GPU rack.

Results

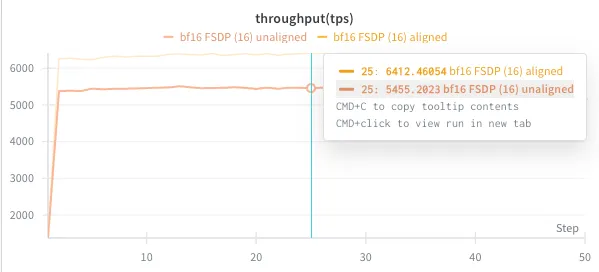

To highlight the importance of aligning with the physical topology, we conducted a comparison where we trained with topology-aware scheduling (--segment=16) and without alignment (--segment=1).

Our testing revealed significant performance differences based on this scheduling choice. When the job is not scheduled in a topology-aware manner — for instance, if the nodes are split across two different GB200 NVL72 systems —we observed a performance regression of over 10% in tokens-per-second throughput. This demonstrates that leveraging SLURM's block scheduling to respect the NVLink-coherent rack topology is essential for maximizing the potential of the NVIDIA GB200 NVL72 platform.

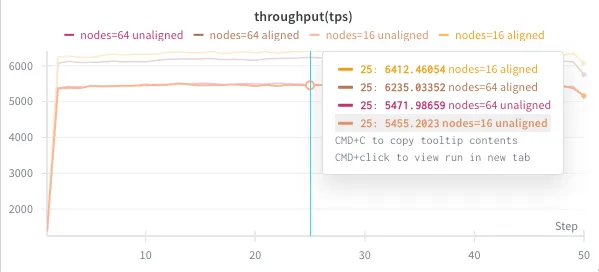

To further validate the benefits of topology-aware scheduling on larger scales, we next scaled the Llama 4 Scout 17B pretraining job from a single rack (16 nodes/64 GPUs) to a multi-rack setup of 64 nodes (256 GPUs). This configuration spans four of the 18-node GB200 racks, demonstrating performance across multiple NVLink-coherent domains connected by NVIDIA Quantum InfiniBand (IB).

The job submission script was modified to request 64 nodes and 64 tasks, effectively utilizing all 256 GPUs across four SLURM blocks:

#SBATCH --ntasks=64

#SBATCH --nodes=64

For the 64-node multi-rack setup (four racks/256 GPUs), we achieved 97% scaling efficiency using InfiniBand between racks. Crucially, topology-aware SLURM block scheduling still yielded a 13% throughput improvement over non-aligned runs. This confirms that maintaining node coherence within NVLink rack boundaries is paramount for maximizing throughput on large-scale NVIDIA GB200 NVL72 training.

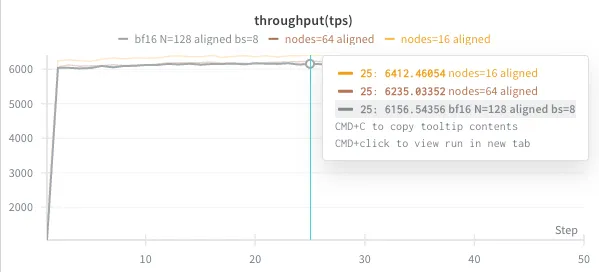

To demonstrate the full scalability of this approach, we further scaled the Llama 4 Scout 17B job to an eight-rack configuration, utilizing 128 nodes and 512 GPUs. Even at this scale, which spans eight distinct NVLink domains connected by InfiniBand, we maintained a remarkably high scaling efficiency of 96% compared to the previous four-rack setup (97%). This confirms that SLURM block scheduling is critical for achieving near-linear scaling and optimal throughput on Crusoe's multi-rack GB200 NVL72 system.

We encourage you to contact us if you're ready to advance your workloads using NVIDIA GB200 NVL72. Our Customer Success team is eager to support your deployments and help you maximize the value of this new architecture.

.jpg)