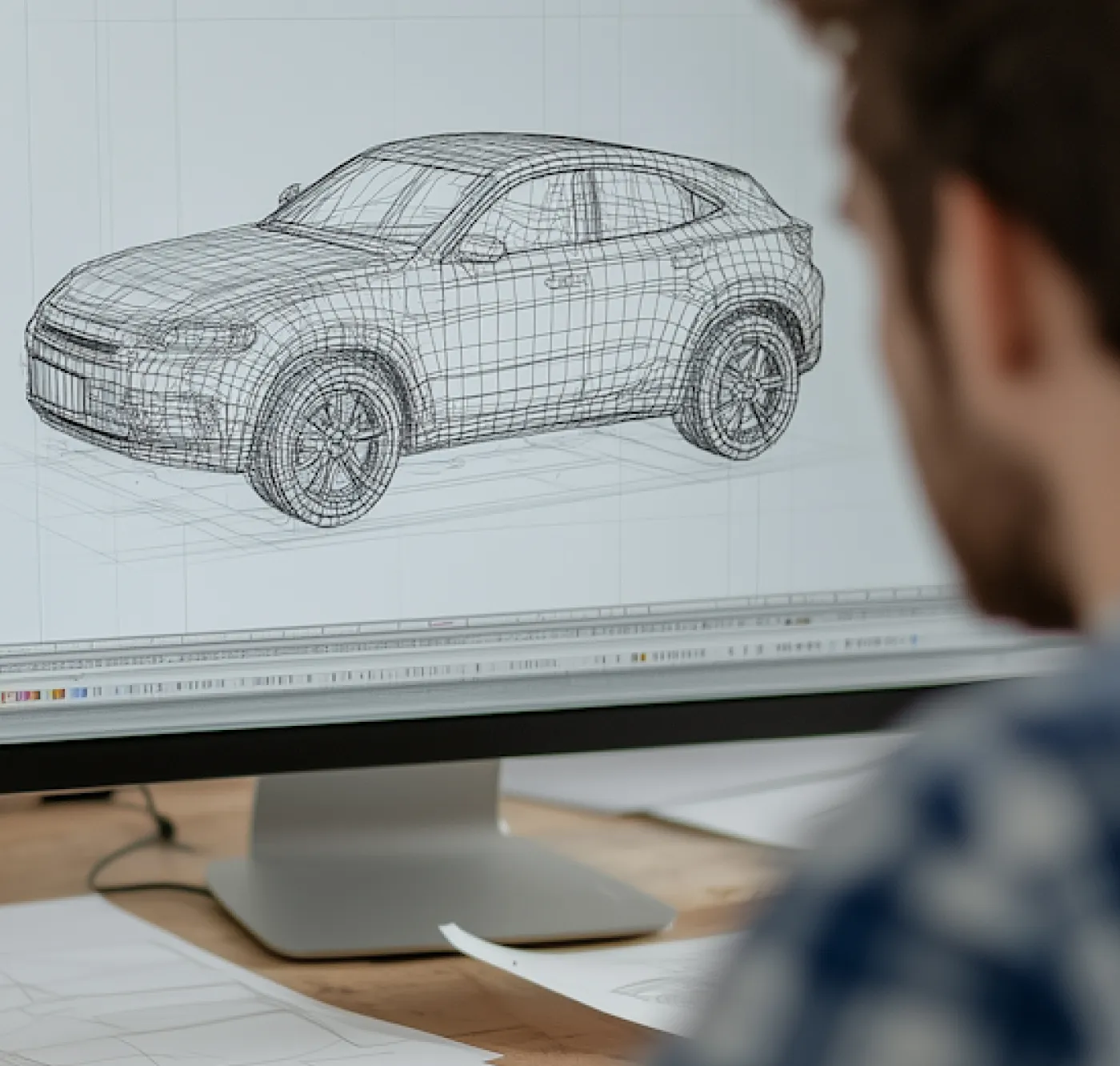

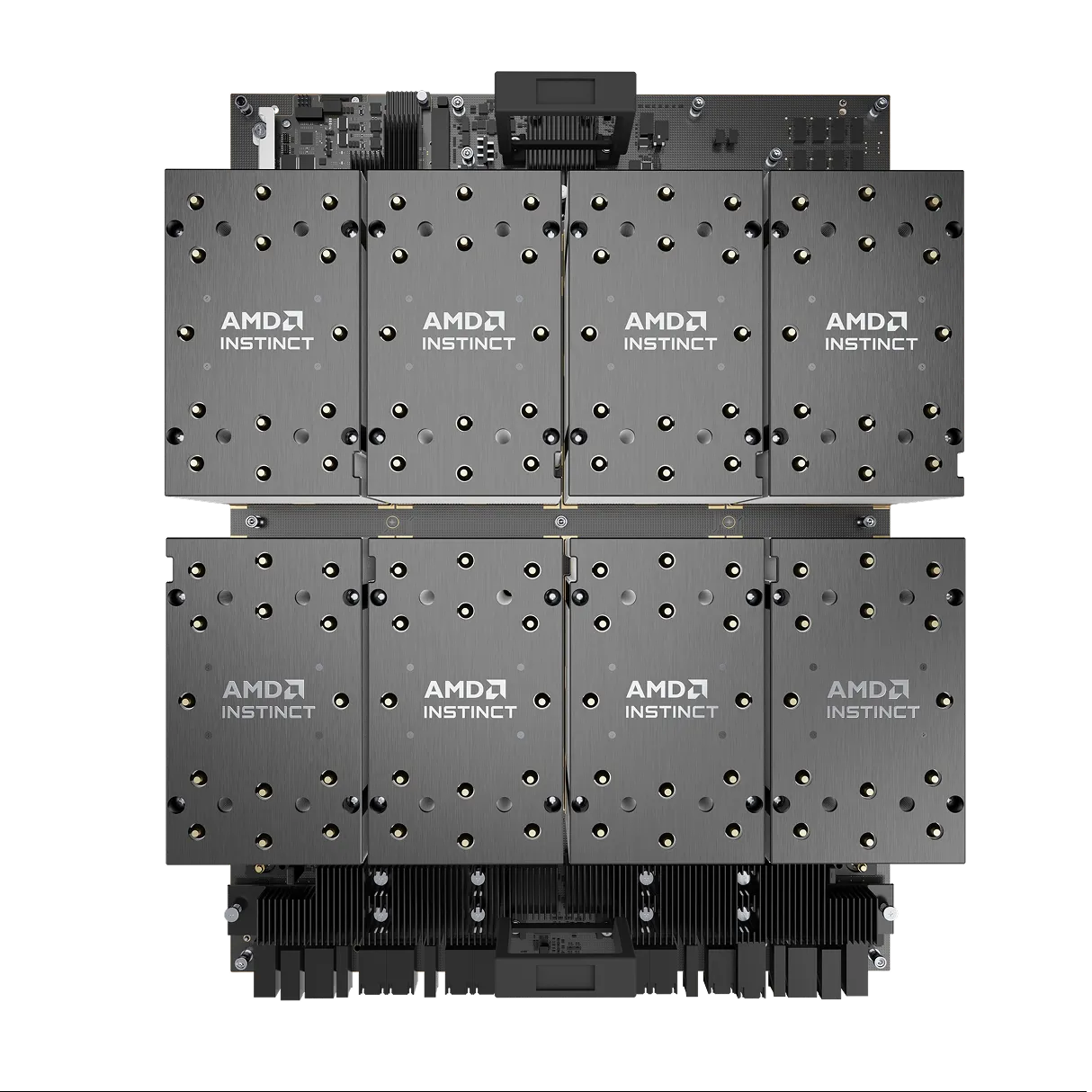

AMD Instinct™ MI300X GPU

The AMD Instinct MI300X is a leading-edge discrete GPU accelerator designed to deliver leadership performance and efficiency for demanding AI and HPC applications.

Available now on Crusoe Cloud.

Built on the next-generation AMD CDNA™ 3 architecture, the MI300X delivers up to 13.7x the peak AI/ML performance of the AMD Instinct MI250X.

HBM3 memory provides 5.3 TB/s of local bandwidth, and direct GPU-to-GPU connectivity provides 128 GB/s of bidirectional bandwidth between each pair of GPUs.

The accelerator features 304 high-throughput compute units, AI-specific functions such as new data-type support and photo and video decoding, and 192 GB of HBM3 memory.

Build the future faster with Crusoe Cloud

Up to 20 times faster and up to 81% less expensive than traditional cloud providers.