Breakthrough inference speed is here

Achieve up to 9.9x faster time-to-first-token*

Process up to 5x more tokens per second*

Optimal price-performance. No limits.

Run model inference with fast time-to-first-token, low latency, limitless throughput, and resilient scaling.

Eliminate latency with Crusoe's MemoryAlloy technology.

Scale to more users while maintaining consistent low latency.

Reduce token spend and serve more users without hitting capacity limits.

Read our blog to learn more.

Crusoe's inference engine is powered by MemoryAlloy™ technology, a unique cluster-native memory fabric that enables persistent sessions and intelligent request routing.

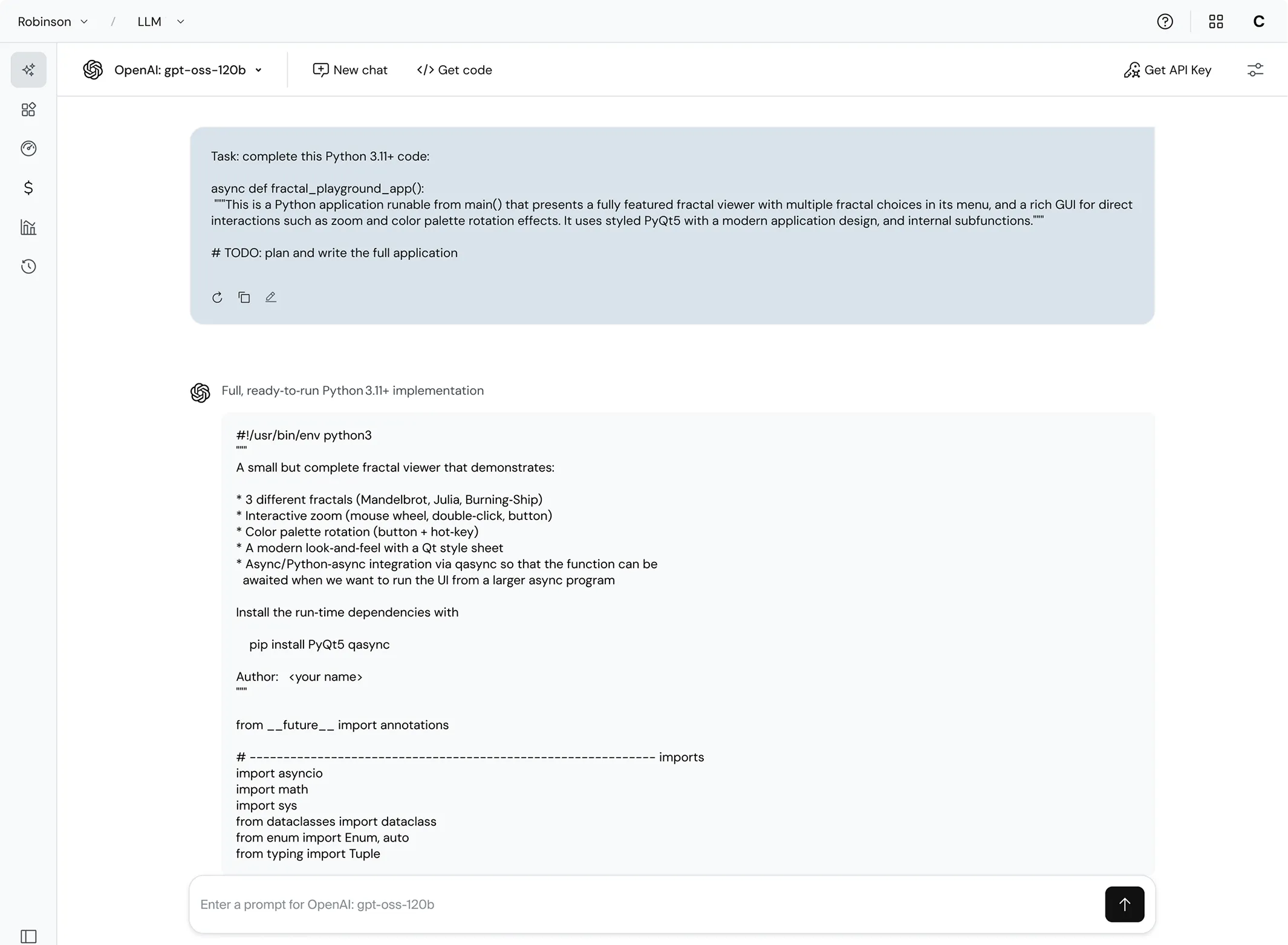

Model catalog

Built with cutting-edge technology to deliver unmatched performance

Crusoe inference engine vs vLLM

Crusoe Intelligence Foundry, designed for AI developers

Frequently asked questions

Yes, connect with our team to get started.

Our model catalog will continue to expand, so check again soon. In the meantime, please contact us if there’s a specific model you’d like access to.

Please contact us if you’re interested in this option.

Stay tuned, this is coming soon.

Please contact us if you’re interested in running inference at the edge.

Please contact us at foundry-support@crusoe.ai and our support team will follow up promptly to answer your questions.
