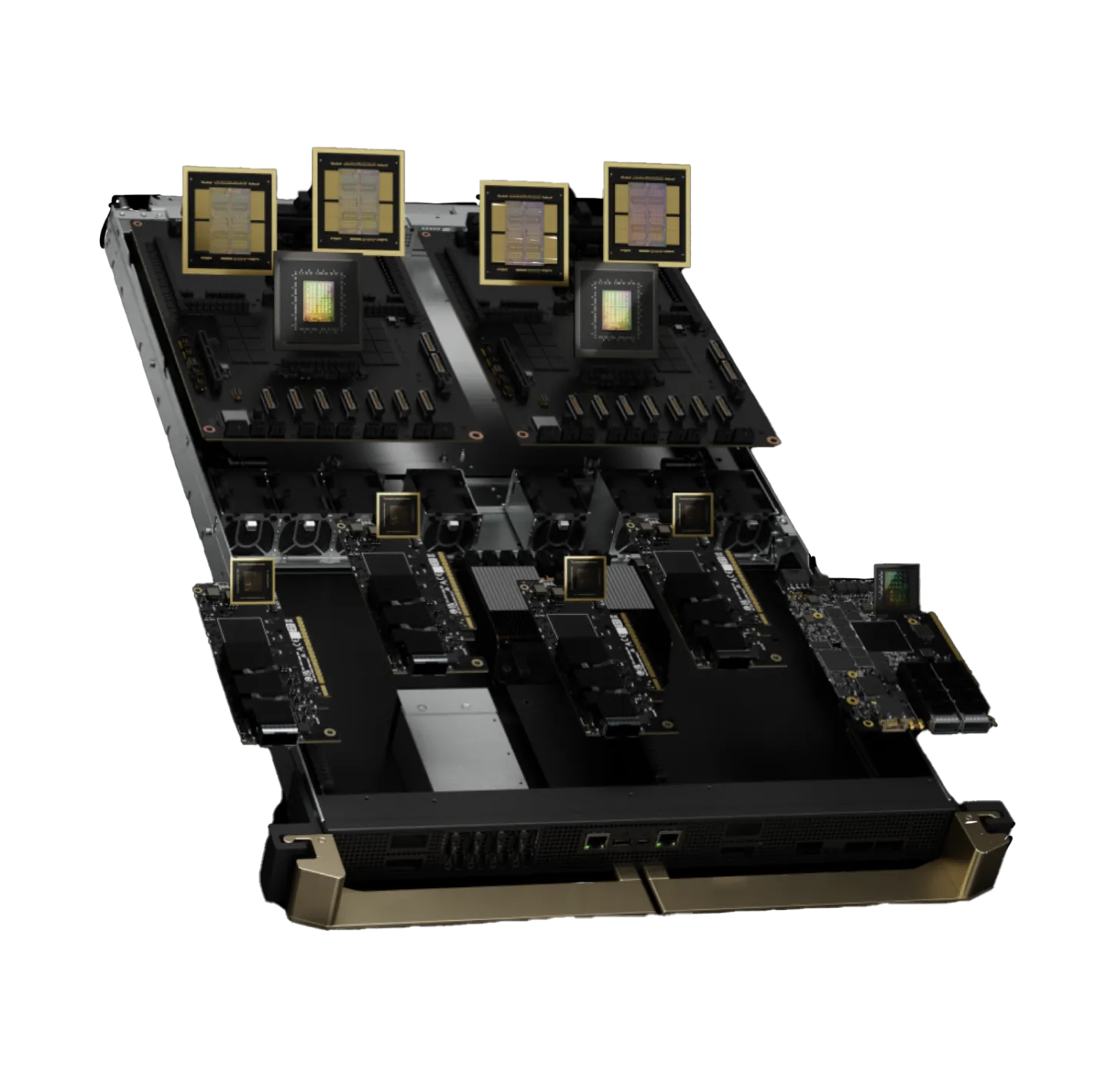

NVIDIA GB200 NVL72

The NVIDIA GB200 NVL72 is a revolutionary liquid-cooled, rack-scale system designed to solve the most complex AI challenges.

Available now on Crusoe Cloud.

The second-generation Transformer Engine introduces FP4 AI, new Tensor Cores, and new microscaling formats, enabling 30X faster real-time inference for trillion-parameter LLMs.

The second-generation Transformer Engine, featuring FP8 precision, enables up to 4X faster training for LLMs at scale.

Liquid-cooled GB200 NVL72 racks deliver 25X more performance at the same power compared to NVIDIA H100 air-cooled infrastructure.

High-bandwidth memory, NVLink™-C2C, and Blackwell's new Decompression Engine deliver up to 6X faster database queries.

Build the future faster with Crusoe Cloud

Up to 20 times faster and 81% less expensive than traditional cloud providers.