The new AI benchmark: Unlocking real-world performance with InferenceMAX by SemiAnalysis

Cut through AI hype with InferenceMAX, the new continuous, open-source benchmark from SemiAnalysis. Get real-time data on AI inference performance, TCO, and throughput.

%20copy.webp)

The world of AI is moving at lightspeed. New models are released, software frameworks are updated, and performance optimizations are rolled out in a continuous stream. For builders on the front lines, choosing the right infrastructure is a constant challenge, and a yearly benchmark can feel like a snapshot of a moment that has long since passed.

To make an informed decision, you need a living benchmark — a source of truth that moves at the same speed as the software itself. That's why we're proud to announce our support for InferenceMAX, a new open-source, continuous benchmark project from SemiAnalysis. This tool is designed to cut through the hype and provide real-world performance data for AI inference workloads.

Why InferenceMAX is a game-changer

InferenceMAX is built on the simple idea that the best benchmarks reflect real-world conditions and are always up-to-date. Instead of relying on static, annual reports, it runs a suite of tests nightly on hundreds of GPUs, constantly re-benchmarking the most popular open-source inference frameworks and models to track performance in real-time.

This approach addresses a core challenge for AI builders: the continuous evolution of not only models but of the software stack and model optimization techniques. Small changes in frameworks like vLLM, SGLang, and TensorRT LLM, or updates to foundational libraries like CUDA and ROCm, can significantly impact performance. Techniques such as quantization or speculative decoding as well as KV Cache optimization influence key inference metrics too. InferenceMAX captures this progress as it happens from the inference API user point of view, providing a live indicator of performance gains across the ecosystem.

It measures what truly matters to developers, not just what's easy to test:

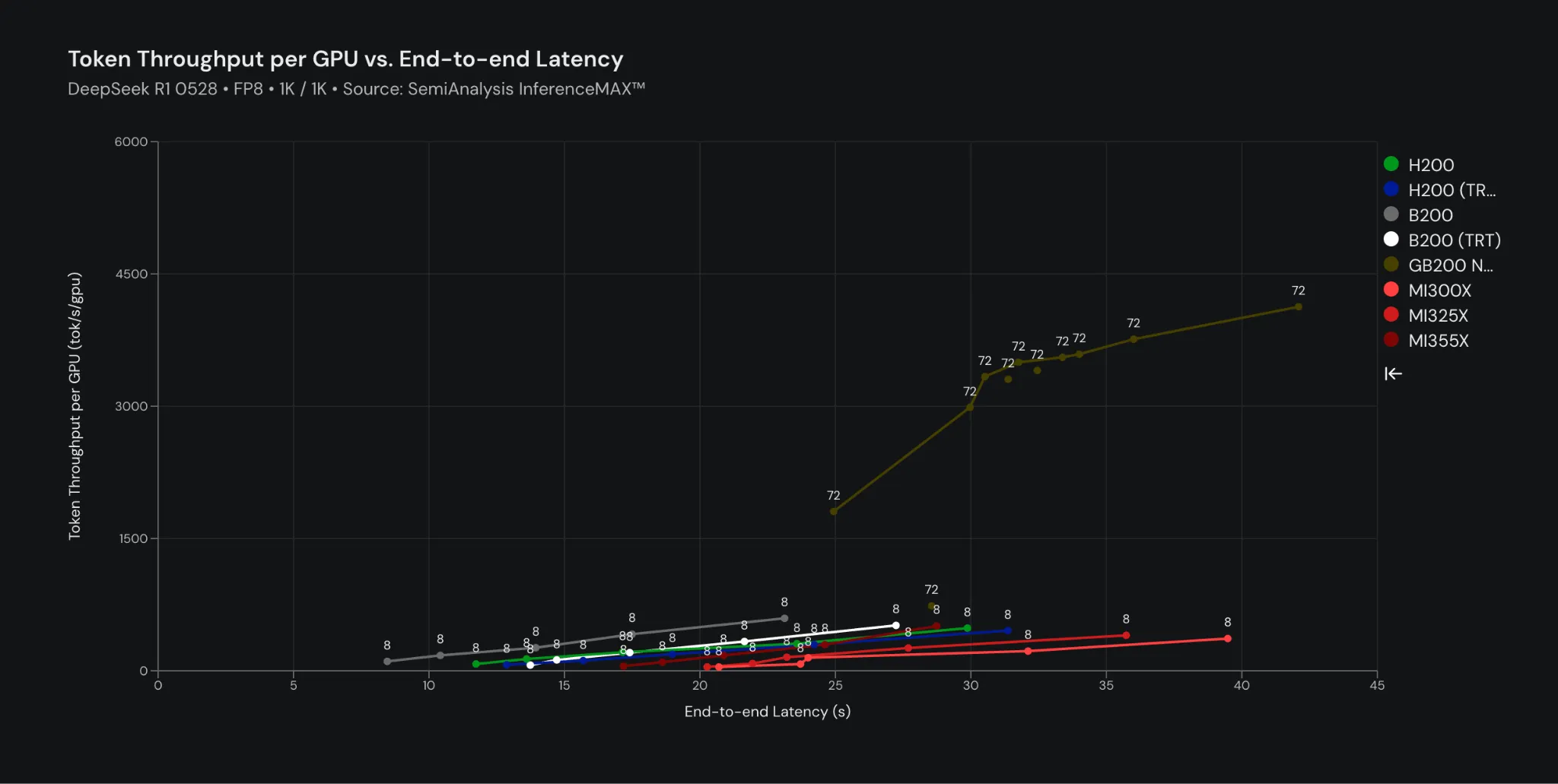

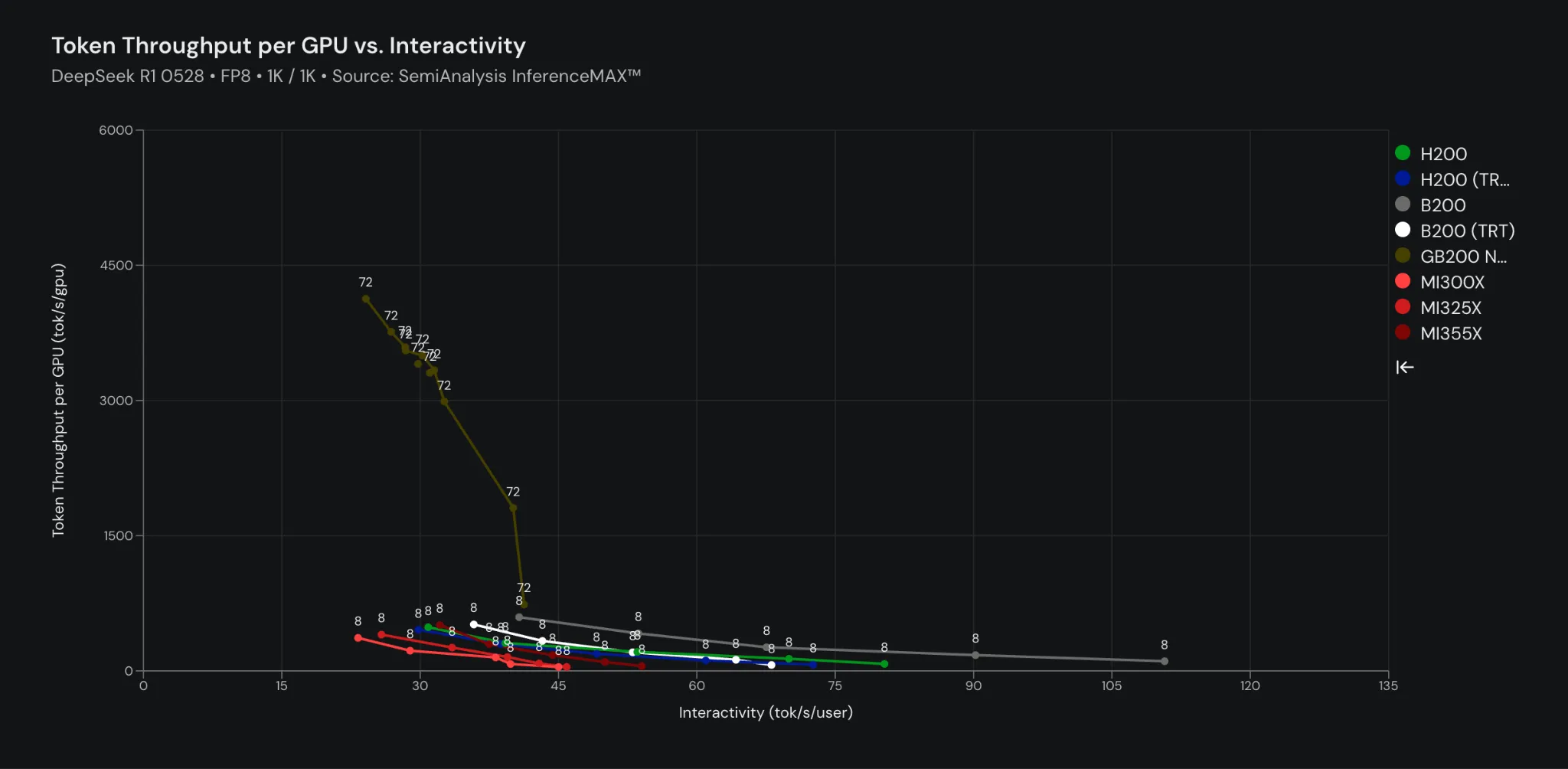

- Throughput vs. interactivity: A core trade-off in serving large language models (LLMs) is balancing the total number of tokens a GPU can generate per second (throughput) with how fast a single user gets a response (interactivity). InferenceMAX plots this fundamental curve, giving you the data you need to optimize for your specific use case, whether that's a real-time chatbot or a batch processing task.

- Real-world workloads: InferenceMAX tests a variety of scenarios that mimic real-world applications. This includes chat (1k input, 1k output), reasoning (1k input, 8k output), and summarization (8k input, 1k output), ensuring the results are relevant to your project.

- Cost efficiency: A GPU's performance is only one piece of the puzzle. InferenceMAX normalizes performance by the total cost of ownership (TCO), giving you a "true north" metric of TCO per million tokens. This is the data that helps you make smart business decisions.

For the initial launch of InferenceMAX v1, the benchmark includes a wide range of GPUs, from the NVIDIA H100 GPU and AMD MI300X to the latest NVIDIA HGX B200 and GB200 NVL72, as well as the new AMD MI355X. It will soon be expanded to include Google TPU and AWS Trainium, making it the first truly multi-vendor open benchmark across the entire industry.

Our commitment to builders

At Crusoe, our mission is to accelerate the abundance of energy and intelligence by providing builders with the infrastructure they need to innovate. To do this, we believe in a foundation of trust and transparency. We are a long-time partner to both AMD and NVIDIA, and we are committed to providing our customers with the best infrastructure to support their ambition.

Open-source initiatives like InferenceMAX are vital for a healthy, competitive ecosystem. By providing clear, unbiased data, they empower you to confidently choose the best platform for your specific workloads.

As Crusoe's Co-Founder and CEO Chase Lochmiller notes, "At Crusoe, we believe being a great partner means empowering our customers with choice and clarity. That's why we're proud to support InferenceMAX, which provides the entire AI community with open-source, reproducible benchmarks for the latest hardware. By delivering transparent, real-world data on throughput, efficiency, and cost, InferenceMAX cuts through the hype and helps our customers confidently select the very best platform for their unique workloads.”

This project is a testament to what's possible when the entire industry collaborates for the benefit of builders everywhere.

Ready to see the data for yourself?

The InferenceMAX live dashboard is publicly available at https://inferencemax.ai. Explore the results and find the perfect hardware and software combination for your next project.

.jpg)